You’d be surprised how difficult it is to implement realistic artificial stupidity. With proper positive-objective AI you at least have a well-defined heuristic you can use for a target function, fitness function, what have you. If you’re looking for purposefully suboptimal solutions in the same high-complexity problem space, while still maintaining control over the outcome, you’re going to be reduced to dealing with stochastic solutions at best.
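One common way to get controllable, stochastic suboptimality is softmax (Boltzmann) action selection. This is a swapped-in illustration, not necessarily what the poster had in mind. You score options with an ordinary heuristic, then sample from them using a temperature parameter: low temperature gives near-optimal choices, while high temperature drifts toward random ones. The action names and scores below are hypothetical.

```python
import math
import random


def softmax_choice(scores, temperature, rng=random):
    """Pick an action key from `scores` (action -> heuristic value).

    Low temperature -> almost always the best action (a "smart" agent).
    High temperature -> close to uniformly random (a "stupid" agent).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    actions = list(scores)
    # Subtract the max score before exponentiating for numerical stability.
    best = max(scores.values())
    weights = [math.exp((scores[a] - best) / temperature) for a in actions]
    return rng.choices(actions, weights=weights, k=1)[0]


# Hypothetical heuristic scores for a single decision.
scores = {"eat": 5.0, "nap": 2.0, "poop_on_floor": 1.0}

rng = random.Random(0)
for t in (0.1, 1.0, 10.0):
    picks = [softmax_choice(scores, t, rng) for _ in range(1000)]
    print(t, {a: picks.count(a) for a in scores})
```

The temperature acts as a single "stupidity dial" that you can tune per fluffy, so the outcome stays controlled even though each individual choice is random.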

I mean, yes, you could just arbitrarily use shitty evaluation functions or a crippled minimax search, but that’s essentially making bad AI by shrinking the problem space. Making a shitty AI that’s still complex in the way a fluffy is complex is far more difficult. They may be stupid, but their reasons for being stupid are not always simple, at least not from the point of view of constructing a logical system to represent the argument or motivation, especially since fluffies are primarily driven by their id.

Welcome to FC! Do I smell something cooking?

What are you doing the AI with, lad? I’m doing something similar, but in UE4.

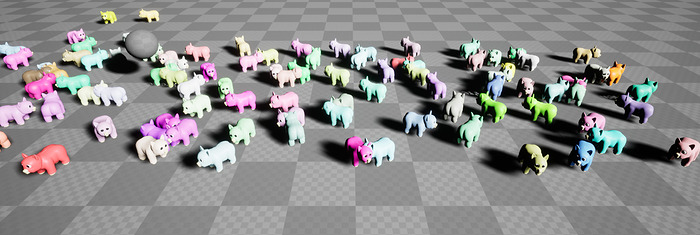

Just a simulation. I haven’t explored the space before, and there’s a more advanced project I’d like to work on. This will never be finished, but the current goal is to have 100 fluffies on a small island. I’m currently working on their “memory” so that they’ll be able to forage for food.
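Here is a minimal sketch of a forgetful food memory for foraging. It assumes the island is divided into grid cells. Each remembered food location carries a confidence that decays every tick, and the location is forgotten once the confidence drops below a threshold. Storing only observed cells in a dict keeps this sparse instead of allocating a whole-map array per fluffy. `FoodMemory`, `decay`, and `forget_below` are hypothetical names, not from the original project.

```python
from dataclasses import dataclass, field


@dataclass
class FoodMemory:
    """Remembers where food was seen, and slowly forgets it."""
    decay: float = 0.9          # fraction of confidence kept each tick
    forget_below: float = 0.2   # memories weaker than this are dropped
    spots: dict = field(default_factory=dict)  # (x, y) cell -> confidence

    def see_food(self, cell):
        # Seeing food again fully refreshes the memory of that cell.
        self.spots[cell] = 1.0

    def found_nothing(self, cell):
        # Visiting a remembered spot and finding it empty erases it.
        self.spots.pop(cell, None)

    def tick(self):
        # Every memory fades a little; weak ones are forgotten entirely.
        self.spots = {
            cell: conf * self.decay
            for cell, conf in self.spots.items()
            if conf * self.decay >= self.forget_below
        }

    def best_guess(self, here):
        """Remembered cell with the best confidence/distance trade-off, or None."""
        if not self.spots:
            return None

        def score(cell):
            dist = abs(cell[0] - here[0]) + abs(cell[1] - here[1])
            return self.spots[cell] / (1 + dist)

        return max(self.spots, key=score)


memory = FoodMemory()
memory.see_food((3, 4))
memory.see_food((10, 1))
for _ in range(5):
    memory.tick()
memory.see_food((12, 2))  # a fresh sighting, but farther away
print(memory.best_guess((0, 0)))
```

A fluffy with a faster `decay` or a higher `forget_below` is simply more forgetful, which also gives you the forgetfulness kind of stupidity for free.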

Since I’ve never explored game AI in depth before, there’ll be hardly any optimizations. I’ve seen your work; it’s very cool. Mine doesn’t involve any player interaction, though. It’s basically going to be a glorified ant farm.

That’s dope. Let me see it when you get it running, hoss.

Not sure if the Modular Fluffy Project by Foxhoarder is relevant to your experiments, but it’s definitely worth mentioning here. Crowdsourcing and sharing resources ought to be the future of fluffy games in general.

Also, someone once tried making a fluffy AI based on an emotion circumplex model and letting the fluffy’s neural connections develop from scratch. As for genuine stupidity, at one point it actually had a glitch where the fluffy went to the food bowl but never ate, because it had somehow learned that going to the food bowl, not eating, was what sated its hunger.

I will take a look at that.

I’ll also check this out. I don’t have much to share at the moment, since I’m still working on several systems, and while some of them work, I wouldn’t consider them finished.

Well, it is a simulation. The only outcome you might want to control is that it acts like a fluffy.

What you say does ring true of a lot of (if not all) game AI nowadays, since it’s objective-based. But @Anger3D is trying to make a dynamic simulation, which is a bit different.

How can a fluffy stupidly complete an objective like picking up a block? It could fail to pick up a block, maybe, but that’s more of a dexterity thing.

To make an AI that is “stupid”, we must think about what makes other beings stupid.

- Forgetfulness. Not remembering information that may be useful for completing a task, either correctly or at all, e.g. forgetting the location of an item like a food bowl.

- A bad belief, or something learned wrong. The fluffy’s beliefs about the world are not a correct reflection of the real world, e.g. believing that hugs can make physical pain go away.

- Doing the first thing that comes to mind. Not thinking deeply enough to come to a better conclusion, e.g. pooping on the floor without realizing that the action has a consequence, especially if that fluffy already knows there is one.
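The third point can be sketched as satisficing. Instead of weighing every option and taking the best, the fluffy walks through options in the order they “come to mind” and takes the first one whose immediate appeal clears a threshold, ignoring consequences. The option names, the ordering, the threshold, and both value tables are hypothetical.

```python
def first_good_enough(options, looks_good, threshold):
    """Return the first option whose immediate appeal meets the threshold.

    `options` is ordered by how quickly each idea "comes to mind";
    `looks_good` scores only the immediate payoff, ignoring consequences.
    Falls back to the first option if nothing clears the bar.
    """
    for option in options:
        if looks_good(option) >= threshold:
            return option
    return options[0]


def best_overall(options, true_value):
    """What a careful agent would do: weigh every option fully."""
    return max(options, key=true_value)


# Hypothetical numbers: immediate relief vs. value including consequences.
immediate = {"poop_here": 0.9, "walk_to_litterbox": 0.6}
with_consequences = {"poop_here": -0.5, "walk_to_litterbox": 0.6}

options = ["poop_here", "walk_to_litterbox"]  # the lazy idea comes to mind first
print(first_good_enough(options, immediate.get, threshold=0.5))  # poop_here
print(best_overall(options, with_consequences.get))              # walk_to_litterbox
```

Note that the fluffy can “know” the consequence (it exists in `with_consequences`) and still pick the wrong thing, simply because it never gets that far down the list.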

All of that can technically be programmed, with some caveats.

Memory can be stored in an array, but the more variables you track, the more RAM you need.

Beliefs can work, but they all have to be preprogrammed, which means you’ll eventually see the same kind of stupidity twice, probably more.

Acting instinctually would mean making the AI actually go through the multiple things it can do and rank them in an order that makes sense. What does it compare its objective to, and in what order?
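One possible answer to “what does it compare, and in what order?” is a utility-style scorer. Each candidate action gets a score from how well it addresses the fluffy’s current needs, and the actions are sorted best first. This is a generic utility-AI sketch; the needs, action names, and effect values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Needs:
    hunger: float   # 0 = full, 1 = starving
    fear: float     # 0 = calm, 1 = panicking
    boredom: float  # 0 = busy, 1 = very bored


# How much each action satisfies each need (hypothetical values).
ACTIONS = {
    "eat":       {"hunger": 1.0},
    "hide":      {"fear": 1.0},
    "play":      {"boredom": 1.0, "fear": 0.2},
    "seek_hugs": {"fear": 0.6, "boredom": 0.4},
}


def rank_actions(needs: Needs):
    """Score every action by how well it addresses current needs, best first."""
    def utility(effects):
        return sum(getattr(needs, need) * amount for need, amount in effects.items())

    scored = [(utility(effects), name) for name, effects in ACTIONS.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored]


print(rank_actions(Needs(hunger=0.8, fear=0.3, boredom=0.1)))
```

Because the ranking depends only on the current needs, an id-driven fluffy falls out naturally: whatever need is loudest wins, whether or not acting on it is wise.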

I don’t think stupidity lies in carrying out an individual sub-objective, like walking or picking up an object; rather, it lies in trying to accomplish larger tasks with incorrect information.

I was more pointing out that it is difficult to algorithmically produce suboptimal results that would be used as part of a ‘stupid’ AI, and that a heuristic approach might be more appropriate. Also, describing memory as a simple array is kind of a goofy way to put it, since you’d need an absurdly large array for even simple simulations, and it would make the whole thing chug. Your data structures should fit your needs.
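As one example of fitting the data structure to the need, here is an episodic memory sketch. It assumes events carry a salience score, and it stores them in a small fixed-capacity min-heap that forgets the least salient event when full. Memory use per fluffy then stays bounded no matter how long the simulation runs. `EpisodicMemory` and the salience values are hypothetical; `heapq` and `itertools` are the Python standard library.

```python
import heapq
import itertools


class EpisodicMemory:
    """Fixed-size memory that keeps only the most salient events."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._heap = []                # min-heap of (salience, seq, event)
        self._seq = itertools.count()  # tie-breaker so events are never compared

    def remember(self, event, salience):
        entry = (salience, next(self._seq), event)
        if len(self._heap) < self.capacity:
            heapq.heappush(self._heap, entry)
        else:
            # Push the new event, then drop whichever is now least salient
            # (which may be the new event itself).
            heapq.heappushpop(self._heap, entry)

    def recall(self):
        """Events from most to least salient."""
        return [event for _, _, event in sorted(self._heap, reverse=True)]


mem = EpisodicMemory(capacity=3)
mem.remember("saw a butterfly", 0.1)
mem.remember("got a hug", 0.6)
mem.remember("found food by the rock", 0.8)
mem.remember("was chased by a dog", 0.95)
print(mem.recall())
```

With 100 fluffies and a capacity of, say, a few dozen events each, total memory is a small constant, and the forgetting is a side effect of the structure rather than extra logic.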

Also, beliefs can be acquired through appropriate state transitions and analytic functions. It’s even possible to implement a form of faith using tagged interactions on top of a fuzzy pattern-matching system, provided you make your behavior loop flexible enough.
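Here is a minimal sketch of a belief acquired from observed state transitions rather than preprogrammed. The fluffy counts how often its pain went down right after it performed an action while hurt, and turns that ratio into a belief strength. In this toy world pain heals on its own and hurt fluffies always ask for hugs, so the fluffy ends up strongly “believing” that hugs fix pain: a wrong belief learned from coincidence, much like the food-bowl glitch mentioned earlier in the thread. This is plain co-occurrence counting, not the tagged-interaction and fuzzy-matching system described above, and every name is hypothetical.

```python
from collections import defaultdict


class BeliefLearner:
    """Learns 'action X makes pain go away' purely from what happens next."""

    def __init__(self):
        self.tried = defaultdict(int)   # action -> times performed while hurt
        self.helped = defaultdict(int)  # action -> times pain dropped afterwards

    def observe(self, action, pain_before, pain_after):
        if pain_before > 0:
            self.tried[action] += 1
            if pain_after < pain_before:
                self.helped[action] += 1

    def belief(self, action):
        """Laplace-smoothed estimate that the action relieves pain (0.5 = no idea)."""
        return (self.helped[action] + 1) / (self.tried[action] + 2)


learner = BeliefLearner()
pain = 0
for step in range(100):
    if step % 20 == 0:
        pain = 5                            # stubbed a hoof
    action = "hug" if pain > 0 else "play"  # hurt fluffies ask for hugs
    before = pain
    pain = max(0, pain - 1)                 # pain heals on its own; hugs do nothing
    learner.observe(action, before, pain)

print(f"belief that hugs fix pain: {learner.belief('hug'):.2f}")  # 0.96
```

Since “play” is never tried while hurt, its belief stays at the uninformed 0.5. Each fluffy’s wrong beliefs depend on its own history, so you don’t get the exact same stupidity stamped out twice.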